What is DFS?

The Distributed File System (DFS) was introduced by Microsoft Corporation for the Windows NT operating system. It was designed to address the problems organizations face when sharing files and data across networked environments.

Since Windows NT, every network operating system Microsoft has released has included the DFS service. The service does not require any additional license beyond the Windows Server operating system license.

To explain what DFS does in the real world, let's look at a simple scenario. Say you are starting a business and have two computers to help run it. They may be used to generate invoices, keep stock details, store research details, and so on, and you may need to share this information between the two computers. For that, we can create a simple network and share files from one computer to the other using ordinary file sharing.

As the business grows, the company may add more computers to the network, and the need to share data will increase. At this stage we can still implement an Active Directory environment to control resources properly with advanced security features, and we can deploy file servers to share files on the network as required.

But what happens when you open branches in different cities or countries, or acquire companies that have their own Active Directory setups? You still need to share files between offices and locations, but now you face many new issues. The branches may be connected using slow leased lines or VPN links. If users need files shared from headquarters, they must go over these low-speed links, and a single file can take ages to arrive. Imagine 100 users trying to access the same share: it will definitely affect company operations.

Now suppose all files are saved on one central file server at HQ that connects to the network over a 100 Mbps link. If it has to answer thousands of requests to copy and update data at the same time, will it cope? It will probably saturate its 100 Mbps link and slow down the processing of every request. And what happens if it hosts business-critical information and goes down during working hours? So a typical file share also has availability and reliability issues. And of course there is no practical way to load balance across different servers, because keeping every server up to date with replicated data is hard to do in practice.

So a typical file sharing mechanism cannot meet every file sharing requirement. What can we do? That's where DFS comes into the picture. DFS was developed to address these major issues with file and data sharing in large, complex networks.

Why do we need DFS?

As discussed above, typical file sharing methods run into problems when applied to complex or advanced network setups. Let's look at some of these common issues and why we should move to an advanced solution such as DFS.

| Issue | Description |
| --- | --- |
| Availability | One of the biggest concerns with using a traditional file sharing system on a large network is availability. With simple file sharing, data is usually shared from one file server or a few. As the network grows, demand for the data on these servers increases, and business-critical information may be shared through them. If a server fails, you cannot access its data until it is brought back up, and you may have to wait until a backup is restored. For a business this can be critical; sometimes it may stop the entire company's operations. There may be a disaster recovery plan in place, but there can still be downtime. Because most businesses depend on digital data, its availability is essential, and traditional file sharing creates a single point of failure. As demand for data on network shares goes up, we must ensure that it stays available. |
| Complexity of management and use | As the network grows, data is shared from different servers for different purposes. To reach the files shared from serverA, for example, you type \\serverA in the Run dialog. With 10 or more servers sharing files, users need to remember all these paths, with IP addresses or host names; with hundreds of servers this is not practical at all. On the administrator's side, he or she must manage all these shares and their permissions by logging in to each server. A method that gave users a single structure for accessing shares, and let administrators manage those shares from a central location, would make things easier for everyone. |
| Load sharing (load balancing) issues | As the network gets bigger, the number of users can increase dramatically, so a file server may receive hundreds or thousands of requests to read and update files. Depending on the hardware, link bandwidth, and so on, the system will start to show performance problems as the number of clients grows. To address this we need some sort of load balancing mechanism, but it has a prerequisite: the same data must be available on several servers, and access requests must be distributed across that group of servers. The question is how to keep the data in sync across all these servers efficiently. Typical file sharing does not support replication by default; with a proper replication method, we could use it to configure load balancing. |
| Branch office and multi-site issues with file sharing | As discussed earlier, a business may have branch offices in different cities or countries, sometimes even in different Active Directory sites, which still need to share files with each other and with headquarters. These offices are usually interconnected with leased lines or VPN links, and most of these links are slow, so copying a file from another branch's file share can take a long time. As the number of user requests increases, it gets slower, and sometimes you may not get the file at all because the link bandwidth is maxed out. This definitely affects company operations. Accessing an up-to-date copy of the same file from a local office file share is much faster. If the files in HQ shared folders could be replicated to local office shared folders, this would also save bandwidth on the slow link, because only the branch office server would copy files across the link instead of hundreds of individual users. |
| Storage issues | As the volume of data grows, so does the storage requirement for file sharing. Say you have a file server that supports a maximum of 1 TB of storage, and as data grows it approaches that limit. There is no way to keep using this server and add storage from a different server to the same shared folder: if you have a share called \\serverA\Data and it has reached its storage limit, you cannot add storage from another server under the same share name. You need to move to a new server and migrate the data. If there were a way to add storage to the share easily from a different server instead of migrating to a new one, it would avoid downtime and other access issues. |

So the traditional system has many limitations and issues, and we need a more advanced system to address them. DFS is the answer. In the next section, let's see how DFS features help address these issues.

DFS and Its Features

DFS is designed to offer simplified access to shares within a large or complex network, providing capabilities such as easy management of the file server infrastructure for administrators, load balancing, replication, and high availability.

Let's look at some of these features of DFS in detail.

DFS Namespace

Take a large network hosting file shares on 10 different servers. To access the shares, users have to use UNC paths such as \\ServerA\Share and \\ServerB\Share for the different shares those servers host. If the shares are in different domains or child domains, it gets even more complex, because users must use the FQDN, for example \\serverA.sprint.local\Share. In the end, these access methods make shares harder for users to work with. Administrators, in turn, spend more time mapping network shares for users, modifying login scripts, and so on. And what happens if the server name or the IP address used to connect to a server changes? Every staff member must be told about the change, and the existing share mappings must be updated.

A new employee may also take a long time to get familiar with this complex file share access process. This affects user productivity too, since users may need to browse several servers to find the exact share they want. So how can we fix this?

DFS introduces a feature called a “Namespace”, where you can map folder targets on different servers to one share path. You no longer need to browse 10 servers to get the files you need; instead, you access one namespace share, for example \\sprint.local\share.

In the example above, the main share name is \\sprint.local\share, and it has subfolders that target shares on different file servers. End users no longer need to worry about the different UNC paths of the file servers. It also hides where the data lives: when users access the share, they do not know which server the files are served from, which could be a server in HQ or one in a branch in a different city. Now suppose one of the file servers crashes and, after being rebuilt, gets a different FQDN, or you need to move it to a different child domain. Its UNC path changes. With normal file sharing we would need to inform every employee about the change, but with a DFS namespace nobody even notices, because it is just a matter of changing the folder target in the back end.
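To make the idea concrete, here is a minimal Python sketch of what a namespace does conceptually: it translates a logical path into the physical UNC path of a folder target. This is a hypothetical model, not the DFS API; the `Namespace` class, its methods, and the server names are made up for illustration, and a real namespace is configured with the DFS Management console or its PowerShell cmdlets.

```python
from dataclasses import dataclass, field


@dataclass
class Namespace:
    """Hypothetical model of a DFS namespace root and its folders."""
    root: str                                    # e.g. r"\\sprint.local\share"
    folders: dict = field(default_factory=dict)  # folder name -> target UNC path

    def add_folder(self, name, target):
        """Create the folder, or re-point it if it already exists."""
        self.folders[name.lower()] = target      # UNC names are case-insensitive

    def resolve(self, logical_path):
        """Translate a namespace path into the physical path on the target."""
        prefix = self.root.lower() + "\\"
        if not logical_path.lower().startswith(prefix):
            raise ValueError(f"{logical_path} is not under {self.root}")
        rest = logical_path[len(prefix):]
        folder, _, remainder = rest.partition("\\")
        target = self.folders[folder.lower()]    # KeyError for unknown folders
        return target + "\\" + remainder if remainder else target


# Usage: users only ever type paths under \\sprint.local\share.
ns = Namespace(r"\\sprint.local\share")
ns.add_folder("Sales", r"\\ServerA\Sales")
ns.add_folder("HR", r"\\ServerB.sprint.local\HR")
print(ns.resolve(r"\\sprint.local\share\Sales\q1.xlsx"))  # \\ServerA\Sales\q1.xlsx

# ServerA is rebuilt as ServerC in another domain: only the target changes.
ns.add_folder("Sales", r"\\ServerC.branch.sprint.local\Sales")
print(ns.resolve(r"\\sprint.local\share\Sales\q1.xlsx"))  # \\ServerC.branch.sprint.local\Sales\q1.xlsx
```

When the server is rebuilt under a new name, only the folder's target entry changes; the path users type stays exactly the same.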

There are two types of namespace you can set up in DFS. A stand-alone namespace is intended for environments without an Active Directory domain. It needs one server to act as the namespace server and serve the namespace to users. The disadvantage is that if this server goes down, users cannot access the shares through the namespace even though the file servers are still up.

A domain-based namespace is for Active Directory environments. Its configuration is stored in Active Directory, so it can be hosted on multiple namespace servers and does not depend on any single one.

Multiple Copies

Suppose you host business-critical data in a share on the head office file server, and the branch offices also need to access the files in this share. Because of the slow links between HQ and the branch offices in other cities, access from the branches will be really slow. But what if the same files could be served from a share in the branch office? It would definitely improve performance.

Using DFS, you can serve the same data from different servers, which means one shared folder can have multiple targets hosting the same data. For example, say you have a folder called \\sprint.local\share set up in a domain-based DFS namespace, targeting the share on ServerA. You can also serve the same data from ServerB in the branch office. When users in the branch access \\sprint.local\share, they are automatically served from the copy hosted on ServerB in their own branch office. Users see no difference in where the files come from, except much better performance.

When you use a domain-based namespace with multiple targets like this, a user accessing the share is automatically directed to the closest target available. This works the same way that Active Directory clients use sites to find the closest domain controller.

This is also important for high availability. If a share has multiple targets, it does not matter if one target goes down; the files remain available from the targets that are online.
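The following Python sketch models, in a simplified way, how a client picks a target: targets in the client's own site come first, targets in other sites follow by link cost, and if a target is offline the client moves on to the next one. The `Target` class, the site names, and the cost table are hypothetical; real DFS offers several referral ordering methods and clients cache referrals, which this sketch ignores.

```python
import random
from dataclasses import dataclass


@dataclass
class Target:
    unc: str            # physical share, e.g. r"\\ServerB\Share" (hypothetical)
    site: str           # Active Directory site the server belongs to
    online: bool = True


def referral(targets, client_site, site_cost, rng=random):
    """Order targets in a simplified 'lowest cost' style: the client's own site
    first, then other sites by ascending link cost. Equal-cost targets are
    shuffled so clients spread out across them."""
    def cost(t):
        if t.site == client_site:
            return 0
        return site_cost.get((client_site, t.site), float("inf"))

    order = list(targets)
    rng.shuffle(order)              # random order among equal-cost targets
    return sorted(order, key=cost)  # sorted() is stable, so ties keep the shuffle


def open_share(targets, client_site, site_cost, rng=random):
    """Return the first reachable target, failing over past offline ones."""
    for target in referral(targets, client_site, site_cost, rng):
        if target.online:
            return target.unc
    raise ConnectionError("no folder target is reachable")


# Usage: one copy at HQ, one in the branch office.
targets = [Target(r"\\ServerA\Share", "HQ"), Target(r"\\ServerB\Share", "Branch")]
costs = {("Branch", "HQ"): 10, ("HQ", "Branch"): 10}
print(open_share(targets, "Branch", costs))  # \\ServerB\Share (local copy)
targets[1].online = False                    # the branch server goes down
print(open_share(targets, "Branch", costs))  # \\ServerA\Share (fails over to HQ)
```

A branch user normally gets the local copy, and when the branch server is down the same path quietly falls back to the HQ copy.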

Replication

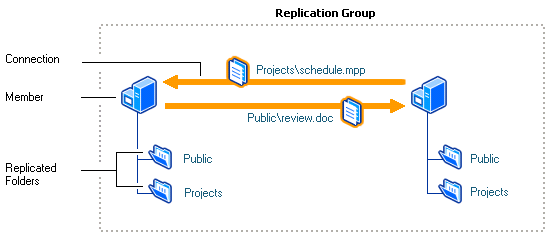

But if we use multiple targets like this, how do we keep the data identical across all these shares? DFS has a solution for that. In DFS you can create a replication group, where you define settings such as the replication members, the replication topology, and the replication schedule used to control bandwidth usage. So you do not need to copy files manually to keep the copies in sync. Replication also copies changes to ACLs (access control lists), so if you change NTFS permissions on a folder in one member of the replication group, the change is replicated to the other members, and as an administrator you do not need to apply the same file permissions on every server. Share permissions themselves are not replicated, so each member's share is still configured separately.

The illustration shows how replication happens. When a file is updated within the replication group, DFS checks the replication schedule and settings and replicates the change automatically.
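As a rough illustration of the idea, the Python sketch below replicates files between members only during scheduled hours and, when members hold different versions, keeps the most recently modified one (DFS Replication also resolves conflicts with a last-writer-wins rule). Everything else is a simplifying assumption: the `Member` and `Version` classes and the hour-based schedule are hypothetical, and real DFS Replication tracks changes in its own database, sends only the changed parts of files using Remote Differential Compression, and moves losing versions to a ConflictAndDeleted folder instead of discarding them.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Version:
    content: str
    modified: int        # time of the last write (hypothetical units)
    acl: tuple = ()      # permissions travel with the file, like NTFS ACLs


@dataclass
class Member:
    name: str
    files: dict = field(default_factory=dict)  # path -> Version


def replicate(members, hour, schedule):
    """Sync every member if `hour` is inside the schedule, keeping the most
    recently modified version of each file (last writer wins).
    Returns the number of file copies made."""
    if hour not in schedule:
        return 0                 # outside the window: leave the WAN link alone
    copies = 0
    all_paths = set().union(*(m.files for m in members))
    for path in sorted(all_paths):
        versions = [m.files[path] for m in members if path in m.files]
        winner = max(versions, key=lambda v: v.modified)
        for m in members:
            if m.files.get(path) != winner:
                m.files[path] = winner
                copies += 1
    return copies


# Usage: hypothetical schedule that replicates only from 18:00 to 23:59.
hq = Member("ServerA", {"report.docx": Version("v1", 100, ("Staff:Read",))})
branch = Member("ServerB")
schedule = set(range(18, 24))
print(replicate([hq, branch], 9, schedule))   # 0, outside the window
print(replicate([hq, branch], 20, schedule))  # 1, copied to the branch with its ACL
branch.files["report.docx"] = Version("v2", 200, ("Staff:Read",))
print(replicate([hq, branch], 21, schedule))  # 1, the newer branch edit wins on HQ
```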

We can also use this method to build backup servers for disaster recovery. Add a backup server to the replication group and let it replicate file changes according to a schedule. Then, if a disaster takes the primary server down, the backup server can serve the files with minimal configuration changes. Keep in mind that replication also copies deletions and accidental changes, so a replica complements regular backups rather than replacing them.

Load Balancing

This feature is very similar to the multiple copies scenario discussed above. Suppose a share called \\sprint.local\Share in DFS is accessed by a large number of clients. As the number of clients increases, the load on bandwidth and server resources becomes high. To avoid performance problems, DFS lets us add servers that host the same data through replication and let them serve the clients. The data on the shares is identical, and users do not notice which server it comes from. If three servers, serverA, serverB, and serverC, host the same data this way, one user may get the data from serverA while another gets it from serverC. This distributes the load among the hosts and improves file sharing performance.
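A toy simulation shows why this spreads the load. Assume, as a simplification, that all three servers are in the clients' own site, so each client receives the targets in random order and uses the first one. The function names are hypothetical, and in this model each client sticks with the server it picked, so the sketch spreads clients, not individual requests, across servers.

```python
import random
from collections import Counter


def pick_target(targets, rng):
    """Simplified referral for clients in the same site as every target:
    the list comes back in random order and the client uses the first entry."""
    order = list(targets)
    rng.shuffle(order)
    return order[0]


def simulate(targets, clients, seed=0):
    """Count how many clients end up on each server."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    return Counter(pick_target(targets, rng) for _ in range(clients))


servers = ["serverA", "serverB", "serverC"]
print(simulate(servers, 3000))  # each server gets roughly a third of the clients
```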

Resource Management

With DFS, managing resources is much easier than with regular file sharing. Suppose a file server needs to be rebuilt because of disk errors. With regular file sharing, you need to disable the shares, move the files to a different server, apply the same sharing permissions, and inform users of any path changes. If you make a mistake copying the permissions, users will complain and you will spend time fixing it, so the process is lengthy and complicated. With DFS, you can simply replicate the data and permissions to the new server, add it as an additional target, and then remove the old target from the list. Users do not even notice, and most of the time the shares have no downtime at all. This is a big relief for administrators and lets them manage resources very efficiently.

DFS, Live in Action

In the previous section I explained the main features of DFS and how they are used. But how can we really use these features in a network?

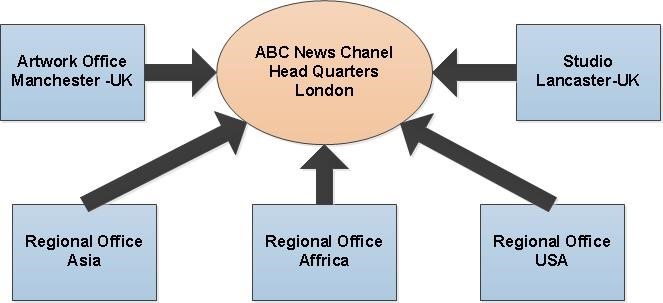

To explain this, I use the scenario of an international news network called “ABC News Channel”, which broadcasts its news worldwide. It has three main regional offices, in Asia, Africa, and the USA. Writers and reporters around the world submit their reports and news to the nearest regional office. The art office is located in Manchester, UK, and the channel studio is in Lancaster, UK. The channel headquarters is in London, UK.

All the regional and city offices are connected to headquarters to form one large network. They use many different ISPs and connection types to connect to this network.

File Sharing Process

The company uses DFS to manage its file sharing requirements. It has a domain-based DFS namespace configured as \\abcnews.local\fileshares, and all other shared folders are listed under this namespace. The main file servers are hosted at company headquarters, and each regional and city office has its own file servers to support company operations.

To describe how they set up file sharing with DFS, I will use a simple practical scenario.

Suppose the channel has a reporter in Sri Lanka. He has some news reports and video and audio files to send to the channel for processing. He connects from his laptop over a VPN link to the network of the nearest regional office, the Asia office in India. He then saves his files to a share called \\abcnews.local\fileshares\asia_office. The IT department in HQ created this share and mapped the “asia_office” folder target to the file server in the Asia regional office, so even though the path uses the company's main DFS namespace, it connects to a server in the India office, and the saved files physically reside on the regional office server. Before the news goes to the studio, however, the reviewing panel in HQ needs to access the files, and the art office in Manchester needs them for editing. What happens if they fetch them from the Asia regional office? It will be slow, because the file transfers go through the slow links between offices. To address this, the same share is replicated to the other city offices and headquarters, so when someone in the art office or HQ accesses \\abcnews.local\fileshares\asia_office, the content is served from their own office's file servers, which is faster. The reporter and other employees do not need to worry about the different servers and UNC paths involved; DFS handles all of that.

Once the news is approved by HQ, it can be copied to a different shared folder that the art office accesses, using the same mechanism described above. In the end, whenever someone accesses a file share that originated in a different office, it is automatically served from the nearest replica server instead of the remote office's file share.

Central Backup

Data backup is critical for any business, and especially valuable for a company like ABC News. With data spread across different regional and city offices, the backup process becomes more complex. Backing up data within each office requires new hardware, licenses, and staff to manage them. To simplify this, instead of backing up at the office level, all file share data in the offices is replicated to backup servers in HQ using DFS Replication, which avoids extra hardware and licenses in each office. If needed, we can back up the replicated data to tape drives or NAS for extra protection, but all of this happens only at HQ. If any data needs to be restored, we can simply replicate those files back to the office shares. We can always control the direction of replication and decide whether it flows from branch to HQ or from HQ to branch as required.
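Replication direction is defined by the connections in the replication group's topology, and in DFS Replication each connection carries changes in one direction. The Python sketch below treats each connection as a one-way (sender, receiver) pair and works out which members end up with a change made on a given member. The member names and the `receivers` function are hypothetical; the sketch models only the topology, not the replication itself.

```python
from collections import deque


def receivers(connections, origin):
    """Return every member that eventually receives a change made on `origin`,
    following one-way replication connections (sender -> receiver)."""
    outgoing = {}
    for sender, receiver in connections:
        outgoing.setdefault(sender, []).append(receiver)
    seen, queue = {origin}, deque([origin])
    while queue:                       # breadth-first walk over connections
        member = queue.popleft()
        for nxt in outgoing.get(member, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen - {origin}


# Hypothetical central-backup topology: offices send to HQ only.
backup = [("IndiaOffice", "HQ-Backup"), ("ManchesterOffice", "HQ-Backup")]
print(sorted(receivers(backup, "IndiaOffice")))  # ['HQ-Backup']
print(sorted(receivers(backup, "HQ-Backup")))    # [] : nothing flows back

# To restore to the India office, add the reverse connection for that office.
restore = backup + [("HQ-Backup", "IndiaOffice")]
print(sorted(receivers(restore, "HQ-Backup")))   # ['IndiaOffice']
```

With the backup topology, changes flow from the offices to HQ and nothing flows back; adding the reverse connection for one office is what allows files to be restored to it.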

High Availability

For a news channel, the availability of data delivered over the network is operationally critical: delays in getting news and reports will bring the company's ratings down. As we know, it operates offices in different countries and cities, connected to HQ through different ISPs at different connection speeds.

Suppose a reporter in Sri Lanka needs to submit a news report to HQ. As usual, he connects over a VPN link to the regional office in India, but at that moment the leased line from the India office to HQ is down. If he tries to save the files to \\abcnews.local\fileshares\asia_office and the share were served directly from HQ, he could not connect to it because the link is down. But because the share is in a replication group, he is redirected to the file share in the India office even while the link is down, and as a user he sees no difference. Once the uplink is restored, the data is replicated to the other members of the group. In this way DFS ensures the availability of the data.

Also, as an administrator you sometimes need to replace servers with new hardware. With an ordinary file server, you need to notify users, disable the shares, move the data to the new server, apply the share permissions, and then remap the new shares. On a large network this is a time-consuming and complex process. With DFS, you can add the new server as a replication member and folder target, then remove the old server from the network. Even while that server is down, no one notices, because the files are still available from the replicated servers. So we can ensure high availability of data by using the DFS service and its features.

Above, I explained how DFS and its features can be applied to a real business scenario. This covers only a few of the major features of DFS; there are many more that can be used depending on the scenario.